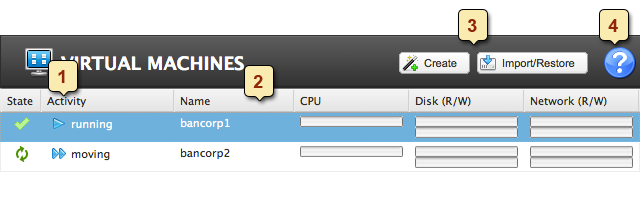

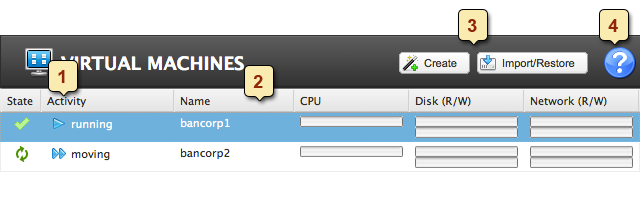

Virtual Machine Page Overview

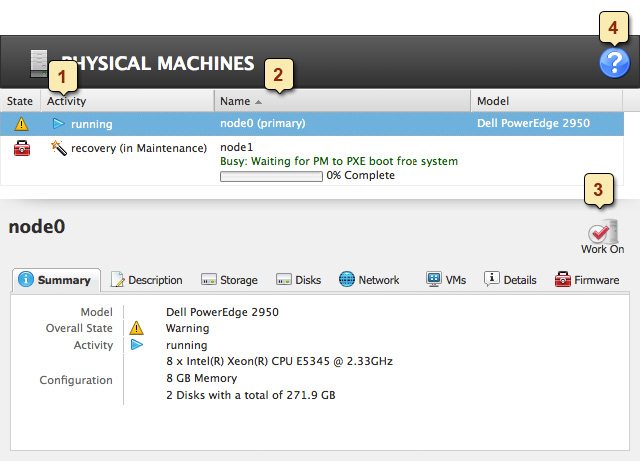

The Interface

- States & Activities: Running, Maintenance, Off, Broken, Booting, etc.

- Virtual Machines: Select to view, double-click to rename.

- Buttons to create, import and manage virtual machines.

- Contextual Help and Troubleshooting.

An Avance Unit may host multiple virtual machines (VMs) running a variety of OS versions and applications. You can create and manage VMs from the Virtual Machines page of the Avance Management Console.

- In the Avance Management Console, click Virtual Machines.

- Click Create VM or Import/Restore VM to install virtual machines, or

- Select a VM to view state/configuration information or perform maintenance.

- Click the tabs to view configuration details.

Creating VMs

The VM Creation Wizard is launched by clicking Create VM on the Virtual Machines page. The wizard steps you through the process of allocating CPU, memory, storage and network resources to the VM.

Before starting the wizard, review the following materials and considerations:

- Preparing an installation image:

- Creating a VCD or

- Registering Linux Repositories and Kickstart Files.

- Allocating Virtual CPUs

- Allocating Memory

- Allocating Storage

Linux installation reboots twice and closes the Console window. To continue monitoring, select the VM on the Virtual Machines page and click Console.

Make sure you change the VM’s time zone to match that in the Avance Management Console. Otherwise, the VM’s time zone will change whenever VMs restart or migrate.

Install the PV drivers immediately after creating a Windows VM. These drivers are needed for correct VM operation, proper VM migration between PMs under fault conditions, and good network connections.

Creating a Virtual CD

The Virtual CD Creation Wizard installs an ISO image to a storage device on the Avance unit. This image is then available to the VM Creation Wizard as a virtual CD (VCD). This procedure can also be used to create VCDs required for installing applications.

- Navigate to the Virtual CDs page in the Avance Management Console.

- Launch the VCD Creation Wizard.

- Select an install source.

- Local storage (via upload).

- Network source.

- Physical CD-ROM/DVD drive.

Exporting VMs

The Export process exports a copy of all the chosen volumes along with the VM’s configuration (stored as an OVF file). The OVF file can then be used to Import/Restore the VM image.

Preparation (Windows only)

- Make sure that all volumes are labeled accurately as outlined in Windows Drive Labeling.

- Execute the Windows sysprep command.

Required Steps:

- Shut down the Virtual Machine you want to export.

- With the Virtual Machine selected, click the Export button in the details section.

- Click Browse, then specify a name and location for the OVF file you are about to export.

- Review Volumes to Capture and make any edits or customizations you need.

VM Configuration Only. Choosing this will include only the configuration details of a Volume but not its data in the export file.

- Click Export to start the export.

Snapshots

Snapshotting is a mechanism to minimize downtime while exporting a VM. A snapshot is an image of a stopped VM at a particular point in time, which can then be exported.

How is this different from Export?

Snapshot, unlike Export, creates a copy of the VM volume(s) and configuration on shared mirror(s) within the Avance unit. Because the process is internal, the VM is down for a shorter period of time than with VM Export.

Snapshot limitations

- When a snapshot is created, an image of the stopped VM is created within the same shared mirror(s). Therefore, make sure that each shared mirror has at least as much free space as the size of the volume you are trying to snapshot.

- A snapshot can degrade the VM’s performance. Delete the snapshot soon after it has been exported.

Creating Snapshots

- Shut down the Virtual Machine you want to snapshot.

- With the Virtual Machine selected, click the Snapshot button in the details section.

- Select the volumes you want to capture and then click Create Snapshot.

Exporting Snapshots

- With the Virtual Machine selected, click Export in the details section.

- In the dialog, choose Export snapshot.

- Click Browse, and choose a location for the export file.

- Click Export to start the export.

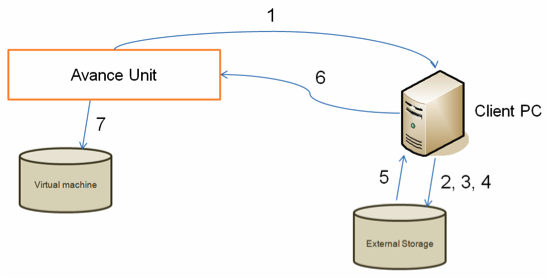

Import/Restore VMs

The Import/Restore process creates a new VM, or replaces an existing VM, from an OVF file. Import assigns a new and unique hardware ID and network interface MAC addresses to the VM. Restore attempts to preserve hardware ID and MAC addresses.

Import/Restore a VM from an Avance Source

VM Import can be used to clone a VM using an existing OVA or OVF exported from an Avance unit.

- On the Virtual Machines page, click Import/Restore.

- Browse to and select the OVA/OVF export file.

- Review the information and make any desired edits.

Name, CPU and Memory. In this section you can change the name of the VM being imported, edit the number of VCPUs, and allocate the total memory it can use.

Storage. Shows all the volumes, their sizes, and their shared-mirror destinations. Check the Create box to carve out a storage container for that volume. Check the Restore Data box to import the data from the VM’s backup file.

Network. Displays all the available networks. You can remove a network or add one that is not already there.

- Click Import/Restore when you reach the end of the dialog to start importing/restoring the VM.

Import a Windows VM from a non-Avance Source

This import process creates a Windows VM from an OVF file created on a non-Avance source using XenConvert 2.1. See Windows P2V or V2V: Creating an OVF from a non-Avance source. To download XenConvert 2.1, go to the Avance Customer or Partner Portal and navigate to the Downloads page.

To import, the selected OVF file (boot volume) and all associated VHD files (additional volumes) must be in the same directory, and no other VHD files can be in that directory.

Windows will only recognize up to three drives until PV drivers are installed in the VM.
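The directory requirement for the OVF and VHD files can be checked before starting an import. The following is a minimal Python sketch, not an Avance tool. It assumes the OVF descriptor lists its disk files in File elements carrying an href attribute, as the DMTF OVF format does; whether a given XenConvert 2.1 export follows this exactly has not been verified here. The function names are hypothetical.

```python
import xml.etree.ElementTree as ET
from pathlib import Path


def local_name(qualified):
    """Strip an XML namespace, turning '{uri}href' into 'href'."""
    return qualified.rsplit("}", 1)[-1]


def referenced_files(ovf_path):
    """Return the file names listed in File elements of the OVF descriptor.

    Assumption: disk files appear as <File ... href="name.vhd"/>.
    """
    names = set()
    for element in ET.parse(ovf_path).iter():
        if local_name(element.tag) != "File":
            continue
        for attribute, value in element.attrib.items():
            if local_name(attribute) == "href":
                names.add(Path(value).name)
    return names


def check_import_directory(ovf_path):
    """Return a list of problems; an empty list means the layout looks importable."""
    ovf_path = Path(ovf_path)
    expected = {n.lower() for n in referenced_files(ovf_path)
                if n.lower().endswith(".vhd")}
    present = {p.name.lower() for p in ovf_path.parent.iterdir()
               if p.suffix.lower() == ".vhd"}
    # VHDs named in the descriptor must sit next to the OVF file.
    problems = [f"missing VHD: {n}" for n in sorted(expected - present)]
    # No other VHD files may be in the same directory.
    problems += [f"unrelated VHD: {n}" for n in sorted(present - expected)]
    return problems
```

Running check_import_directory on the path of the OVF file before clicking Import/Restore gives a quick list of missing or stray VHD files to fix first.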

- On the Virtual Machines page, click Import/Restore.

- Browse to and select the OVA/OVF export file.

- Review the information and make any desired edits.

Name, CPU and Memory. In this section you can change the name of the VM being imported, edit the number of VCPUs, and allocate the total memory it can use.

Storage. Shows all the volumes, their sizes, and their shared-mirror destinations. Check the Create box to carve out a storage container for that volume. Check the Restore Data box to import the data from the VM’s backup file.

Network. Displays all the available networks. You can remove a network or add one that is not already there.

- Click Import/Restore when you reach the end of the dialog to start importing/restoring the VM.

P2V only: Disable any services that interact directly with hardware. These services are not warranted in a virtualized environment. Examples include:

- Dell OpenManage (OMSA)

- HP Insight Manager

- Diskeeper

V2V only: Disable the following services:

- VMware Tools

- Hyper-V tools

Import a Linux VM from a non-Avance Source

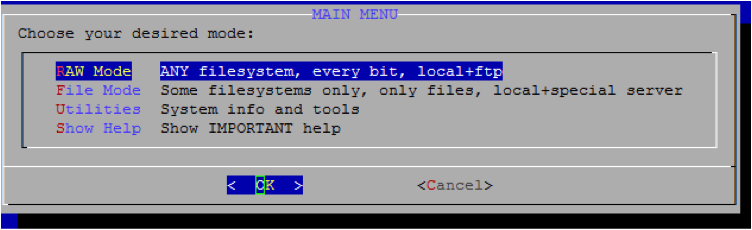

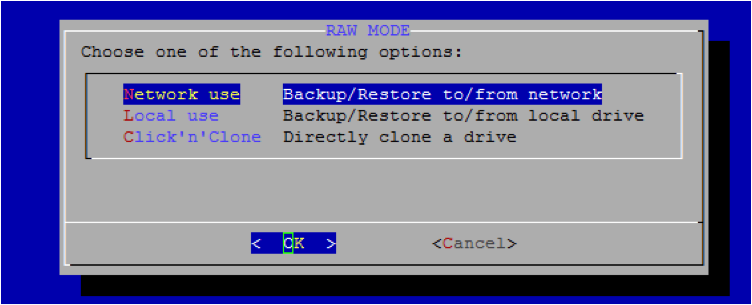

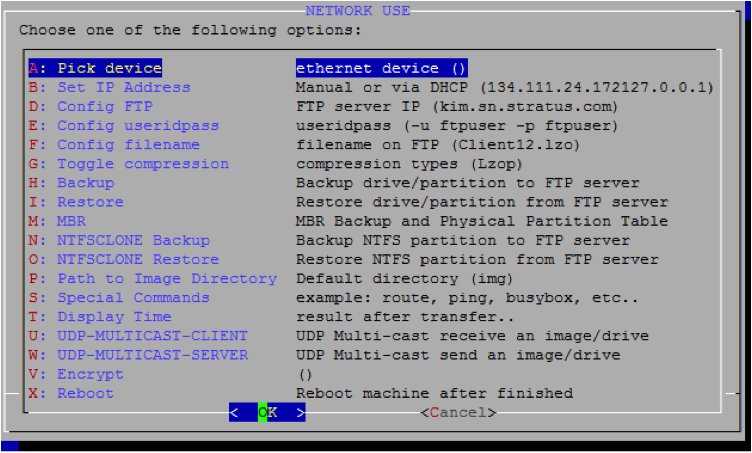

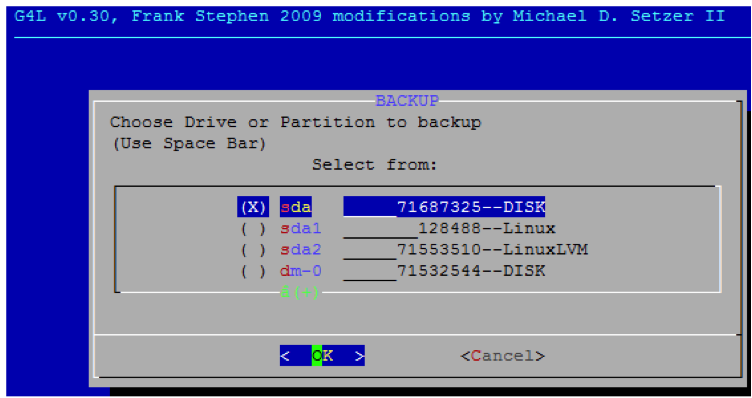

The Linux import process creates a VM from a non-Avance source from an OVF file created using the open source tool G4L. See the instructions in the Linux P2V or V2V section of the Avance P2V, V2V and VM Cloning guide available from the Download page at http://avance-productinfo.stratus.com.

Windows Based VMs

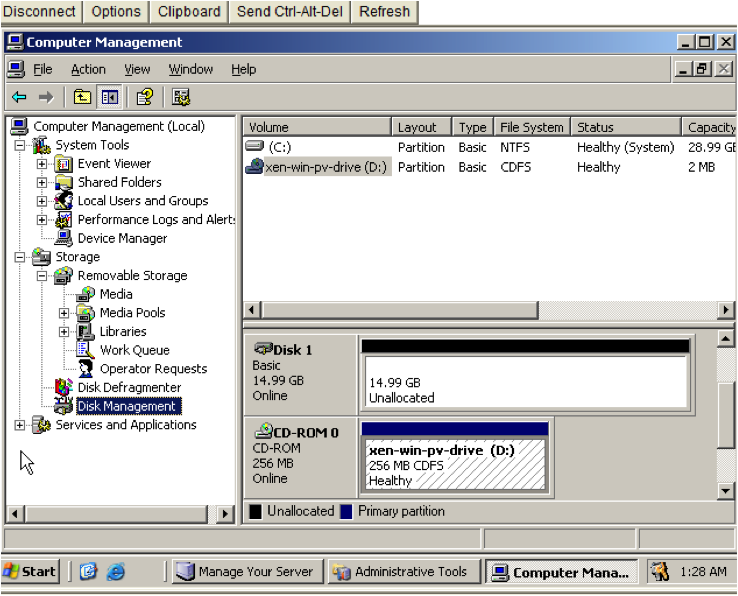

Installing Windows Para-Virtualized Drivers

Avance includes “para-virtualized” (PV) network and disk drivers designed to maximize Windows VM performance:

- Significantly enhance performance for network and storage subsystems.

- Enable use of Windows disk configurations with more than three volumes.

- Ensure that under fault conditions, VMs properly migrate from the failed to the operating PM (node) without interruption.

Installing or upgrading para-virtualized (PV) drivers is required for the proper operation of a Windows virtual machine (VM) on an Avance system. To do this, insert the xen-win-pv-drivers-x.x.x CD into the virtual machine, then log in to the VM and AutoPlay/AutoRun the CD drive (normally D:) by double-clicking or right-clicking the drive in an Explorer window. AutoRun executes the AvanceXenSetupAssistant, which guides you through the installation (or upgrade) of PV drivers on a VM.

Procedure:

- In the Avance Management Console, click Virtual Machines.

- Select the Windows VM.

- Click the CD Drives tab.

- Click Eject CD to remove any CD listed.

- Select xen-win-pv-drivers-x.x.x. Click Insert a CD.

- Connect to the new Windows VM using the Avance Management Console’s VM console or another remote desktop application, such as Windows Remote Desktop Connection.

- To use the VM console: Click Virtual Machines. Select the VM. Click Console.

- In the Windows VM, open My Computer.

- Double-click on xen-win-pv-drive (D:) to AutoRun the AvanceXenSetupAssistant. See discussion below.

- Accept the license agreement.

- Type the installation path. Click Install. The Reboot now prompt appears.

- In the Avance Management Console, click CD Drives. Click Eject CD.

- When prompted, click Reboot now. Click Finish. Do not delay restarting.

The virtual disk volumes appear in the VM and are used as if they were physical disks. For more information, see “Windows VMs: Accessing Newly-Created Disks”.

Microsoft’s .NET framework (version 3.5 or 4) must be installed on a VM in order to install the PV drivers. If there are no pre-installed versions of .NET on your VM, the AvanceXenSetupAssistant attempts to install .NET 4 automatically and then proceeds with the PV install. However, if AvanceXenSetupAssistant detects that .NET is already installed, it does not modify .NET and proceeds directly with the PV install. If the PV install subsequently fails, messages appear when XenSetup.exe is run by AvanceXenSetupAssistant. To fix this, install or upgrade .NET 3.5 or 4 manually and then restart the AutoRun process.

Note 1: Recent versions of Windows 2008 or Windows 7 may confuse AvanceXenSetupAssistant and may appear to have a pre-installed .NET installation when there is none. Consult the Microsoft documentation for the proper procedure for installing or upgrading .NET on your VM, and then perform the AutoRun again.

Note 2: Avance provides the .NET 4 installer on the xen-win-pv-drivers CD. You can double-click or right-click the dotNetFx40_Full_x86_x64 file to start the .NET 4 installer if you decide to use .NET 4 on your VM. If this succeeds, return to step 8, above. If the .NET installation fails, see note 3.

Note 3: A Windows 2003 or Windows XP VM may require that the Windows Imaging Component (WIC) be installed prior to the .NET installation. You can double-click or right-click the wic_x86_enu or wic_x64_enu file to install WIC on your VM (only one of these will match your VM, but starting the other one is harmless). After WIC is installed, the .NET 4 installer can be run (return to note 2).

If you install a Windows Server 2008 VM, disable hibernation (enabled by default in some cases).

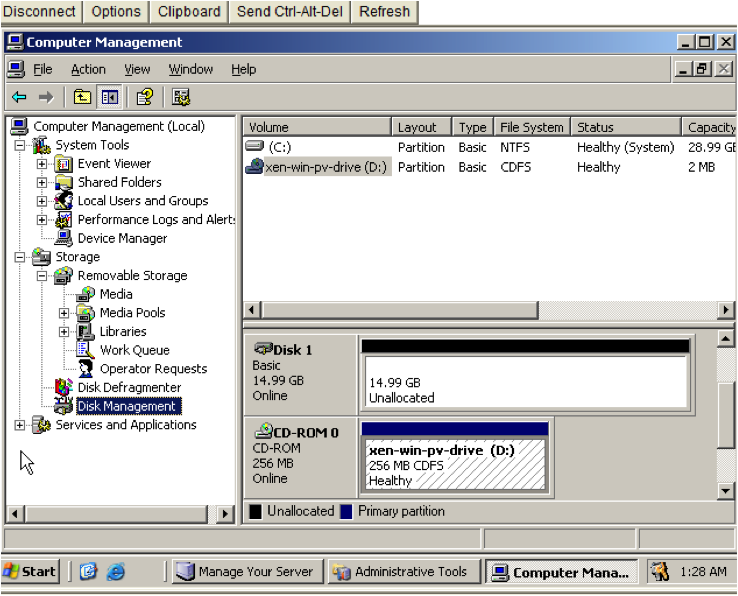

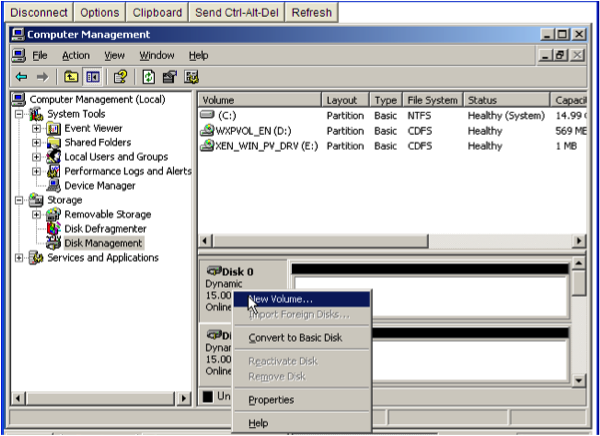

Accessing Newly-Created Disks

To format new drives

- Use a remote desktop application to connect to the VM.

- Select Start > Administrative Tools > Computer Management.

- Select Disk Management.

- If the Initialize and Convert Disk Wizard does not start, right-click a disk and select Convert to Dynamic Disk.

- If prompted to initialize disks, select the disks. Click Next.

- When prompted, select the virtual disks to convert to dynamic disks. Click Next.

- Click Finish to start creating volumes.

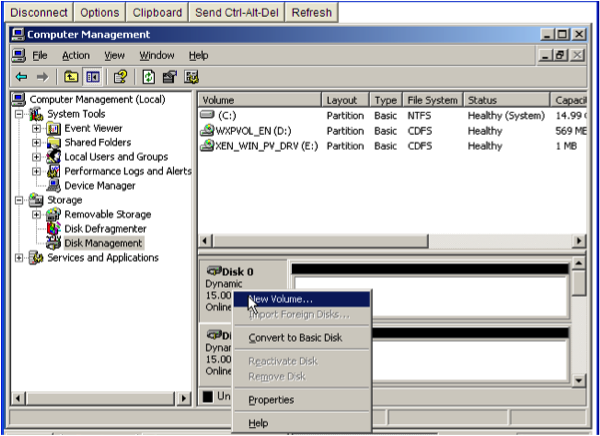

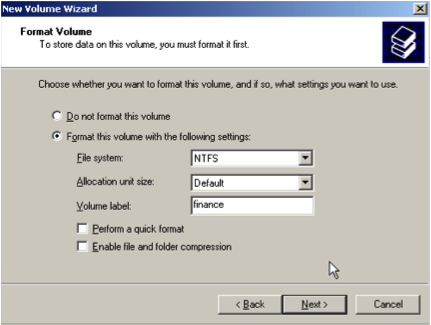

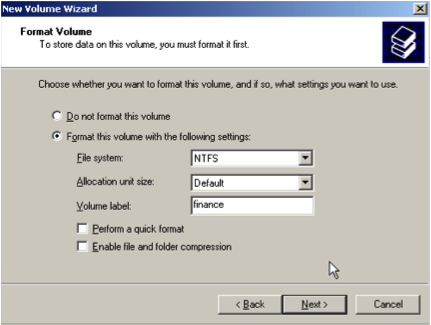

To create a new volume

Windows disk volumes in the VM are created on the virtual disk volumes defined when you created the VM.

- Use a remote desktop application to connect to the VM.

- Select Start > Administrative Tools > Computer Management.

- Right-click the virtual disk on which to create a volume. Select New Volume from the menu.

- Click Next.

- Select the volume type. Because Avance is already mirroring data at the physical level, volume redundancy is not required.

- Select the virtual disk volumes to allocate to the new VM.

- Select volume format settings. Click Next.

- Review your selections. Click Finish. The new volume appears in Disk Management.

- Restart Windows

Installing Applications on Windows Virtual Machines

You can install applications on a Windows VM from the network, or from an Avance VCD created from an application CD/DVD (see “Creating a VCD”).

Each VCD consumes disk space. Consider deleting VCDs when finished with the installation.

Installing Applications from a VCD

- In the Avance Management Console, click Virtual Machines.

- Select the VM. Click the CD Drives tab.

- Click Eject CD to remove any CD listed.

- Select the VCD installer for the application. Click Insert a CD.

- Connect to the VM from the Console or a remote desktop application.

- The installation CD is in the VM’s CD drive. Install the application following the vendor’s instructions.

- When installation is complete, return to the Avance Management Console. Click Eject CD.

Linux Based VMs

Creating a Linux Repository

As an example of how to create a repository, the following steps describe how to create a CentOS repository, from a distribution on CDs, on a Linux server running Apache.

- If it does not already exist, create a directory to mount the CD-ROM as follows:

$ mkdir -p /mnt/cdrom

- Create a centos directory in the Apache Web root directory. For example, for Red Hat® Linux, create the /var/www/html/centos directory:

$ mkdir /var/www/html/centos

- Mount and copy each of the 4 CDs to the directory you just created:

$ mount /dev/cdrom /mnt/cdrom

$ cp -rf /mnt/cdrom/* /var/www/html/centos

Repeat this step for the remaining CDs.

Registering Linux Repositories and Kickstart Files

If you are installing a Linux-based VM and want to use a repository source:

- Review your operating system documentation for information on creating, modifying, and using repositories and kickstart files.

- Make sure the PMs have network access to the required repositories.

- Identify web-based Linux repositories containing the required third-party software images. For example, CentOS maintains repositories at http://vault.centos.org/.

- Optional: Create a Linux repository and include kickstart files with it. For details, see Creating a Linux Repository.

Registering Linux Repositories

- In the Avance Management Console, click Linux Repositories.

- Click Add a Repository.

- Type the URL for the repository location.

- Click Finish.

Registering a Kickstart file for a Linux Repository

- In the Avance Management Console, click Linux Repositories.

- Click Add a Kickstart.

- Use Select a Repository to select the repository for which you are specifying a kickstart file. Only repositories registered with Avance are listed.

- Type the Location of Kickstart File (URL).

- Type a Name of Kickstart.

- Type a Description of Kickstart.

- Click Finish.

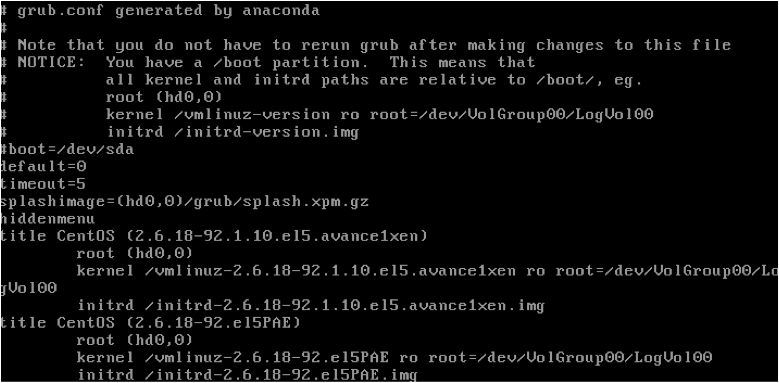

Applying Linux Kernel Patches

If you installed a Linux VM, see the Avance Compatibility Matrix to find supported Linux releases.

- Use the Downloads tab to obtain needed kernel patches (source or binary packages).

- Type these commands:

rpm -ivh --force kernel-patch-file_name.rpm

reboot

Where kernel-patch-file_name.rpm is the downloaded patch file.

- To install kernel patches at the same time as a VM:

- Add the patches to the repository and kickstart file.

- Add this command to the %post section of the kickstart file:

rpm -i server_name/kernel-patch-file_name.rpm

Where server_name is the repository server.

Creating Disk Volumes in Linux Virtual Machines

To create a new volume in a Linux VM, use the volume management tool or edit files as needed. See your Linux documentation for complete instructions.

In Linux VMs, disk device names are /dev/xvda through /dev/xvdh, instead of the standard /dev/sda through /dev/sdh.

The virtual disk volumes appear in the VM and are used as if they were physical disks.

Installing Applications in Linux Virtual Machines

You can install Linux applications only from a network. Use a remote desktop application to connect to the VM from a management PC and install the application.

Provisioning Virtual Machine Resources

Allocating Virtual CPUs

A virtual CPU (VCPU) is defined as:

- A single physical CPU thread, when Hyper-threading is enabled

- A single physical CPU core, when Hyper-threading is disabled.

Avance supports a maximum of 8 VCPUs per VM. The total number of VCPUs available for multiple VMs running on the Avance unit depends on the number of CPU sockets, the cores per socket, and the Hyper-Threading configuration.

When Hyper-Threading is enabled, Avance allows you to over-provision by two VCPUs (physical CPU threads). These are the two VCPUs dedicated for the Avance software.

Example: Server has two sockets with six cores per socket and two threads per core:

| VCPUs | Description |
| --- | --- |
| 24 | Total available VCPUs |
| -2 | VCPUs dedicated for Avance software |
| 22 | VCPUs available for VMs (recommended) |
| 24 | VCPUs available for VMs (over-provisioned) |

When Hyper-threading is disabled, the user can over-provision by more than 2x the number of VCPUs (physical CPU cores). Example: Server (PM) has two sockets with six cores per socket and Hyper-Threading is disabled.

| VCPUs | Description |
| --- | --- |
| 12 | Total available VCPUs |
| -2 | VCPUs dedicated for Avance software |
| 10 | VCPUs available for VMs (recommended) |
| 24 | VCPUs available for VMs (over-provisioned) |
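The recommended VCPU budget follows directly from the VCPU definition: count physical threads when Hyper-Threading is enabled, or physical cores when it is disabled, then subtract the two VCPUs dedicated to the Avance software. A minimal Python sketch of that arithmetic is shown here; the function name and parameters are hypothetical and are not part of any Avance interface.

```python
def vcpu_budget(sockets, cores_per_socket, threads_per_core, hyperthreading):
    """Return (total, recommended) VCPU counts for one server.

    A VCPU is one physical thread with Hyper-Threading enabled,
    or one physical core with it disabled.
    """
    per_core = threads_per_core if hyperthreading else 1
    total = sockets * cores_per_socket * per_core
    reserved_for_avance = 2  # two VCPUs are dedicated to the Avance software
    return total, total - reserved_for_avance


# Two sockets, six cores per socket, two threads per core.
print(vcpu_budget(2, 6, 2, hyperthreading=True))   # (24, 22)
print(vcpu_budget(2, 6, 2, hyperthreading=False))  # (12, 10)
```

The sketch covers only the total and recommended figures; how far beyond them a unit can be over-provisioned is governed by Avance itself.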

Considerations When Over-Provisioning Virtual CPUs

In general, Stratus recommends that you avoid over-provisioning CPU resources. You should only over-provision physical CPUs under the following conditions:

- The peak VCPU resources consumed by the combined VMs do not exceed the physical resources of the Avance unit.

- One or more VMs are used at different times (such as off-peak backups).

- Peak total CPU use by VMs will not affect service level agreements or required response times.

- Each VM’s CPU use is well understood, and its application(s) are not prone to resource leaks. When CPUs are over-provisioned, a leak in one VM can affect the performance of other VMs.

If the unit’s capacity is exceeded, each VM is allocated a share of the physical processing capacity proportional to its allocated share of virtual processing capacity. The only way to divert more processing to a specific VM would then be to shut down one or more of the other VMs.

To view the Avance unit’s VCPU allocation, click the unit name in the Console and look at CPU & Memory. CPU utilization per VM can also be viewed on this page by clicking show details under statistics.
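As a worked illustration of the proportional allocation described above, the following Python sketch divides a unit's physical capacity across VMs according to their VCPU allocations. It only models the stated rule; it is not the Avance scheduler, and the function name, VM names, and numbers are hypothetical.

```python
def cpu_shares(physical_capacity, vcpus_by_vm):
    """Split physical capacity across VMs in proportion to their allocated VCPUs."""
    total_vcpus = sum(vcpus_by_vm.values())
    return {
        vm: physical_capacity * vcpus / total_vcpus
        for vm, vcpus in vcpus_by_vm.items()
    }


# 20 VCPUs allocated on a unit with 10 physical VCPUs available to VMs.
shares = cpu_shares(10, {"vm-a": 8, "vm-b": 8, "vm-c": 4})
print(shares)  # {'vm-a': 4.0, 'vm-b': 4.0, 'vm-c': 2.0}
```

In this example, shutting down vm-c would raise the share of each remaining VM from 4.0 to 5.0, which is the only lever the text describes for diverting more processing to a specific VM.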

Limitations in Windows 2000 cause the Avance Management Console to report inaccurate CPU use, usually too heavy. Instead, use the performance monitoring tool in Windows 2000.

Allocating Memory

Avance does not allow over-provisioning of memory for running VMs. The total memory that can be allocated to VMs is equal to the total physical memory of the PMs, minus 1 GB for the OS. In addition, if the PMs have different physical memory capacities, Avance defines the maximum memory to equal that of the PM with the least memory. For example, if PM1 has 16 GB memory and PM2 has 8 GB, the memory available for allocation to VMs would be:

8 GB (least memory of either PM) – 1 GB for OS = 7 GB

The minimum virtual memory allocation is 256 MB, but 64-bit operating systems require at least 600 MB. If a VM is shut down, its memory is freed and can be re-provisioned to other running VMs. However, if that VM is to be returned to service, you must first shut down or re-configure another VM to free the needed memory again.
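The memory rule can be expressed as a short calculation: take the memory of the smaller PM, subtract 1 GB for the OS, and check that the running VMs fit within the result. The Python sketch below assumes all sizes are given in GB; the function names are hypothetical.

```python
def allocatable_memory_gb(pm_memory_gb, os_reserve_gb=1):
    """Memory available to running VMs: the smaller PM's memory minus the OS reserve."""
    return min(pm_memory_gb) - os_reserve_gb


def fits(vm_allocations_gb, pm_memory_gb):
    """True if the running VMs fit, since memory cannot be over-provisioned."""
    return sum(vm_allocations_gb) <= allocatable_memory_gb(pm_memory_gb)


print(allocatable_memory_gb([16, 8]))  # 7
print(fits([4, 3], [16, 8]))           # True
print(fits([4, 4], [16, 8]))           # False
```

Only running VMs count toward the total, which is why a VM that was shut down may not be restartable until another VM releases memory.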

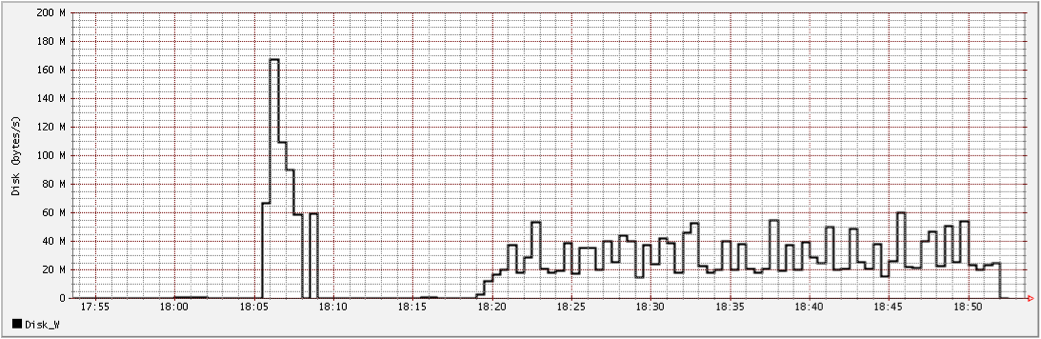

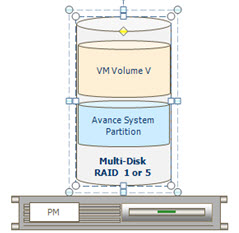

Allocating VM Storage

How you allocate storage can have a dramatic impact on system performance and your ability to fully utilize available capacity. Please map out your storage allocation applying the following considerations.

- Minimize stranded storage: Since Avance volumes cannot span storage groups, plan volume assignments to minimize unusable “stranded” storage. This maximizes free space for new VMs and VCDs.

- Maximum volumes: The Avance unit can have no more than 62 total volumes for VMs and VCDs.

- Leave space for additional VCDs: Leave at least 5 GB of free space in each storage group to allow room to create VCDs for installing additional VMs/Applications.

- Separate boot and data volumes. Separating the boot and data volumes helps preserve the data and makes it easier to recover if the boot volume crashes. Consider putting all boot volumes on one disk, with associated data in separate volumes.

- Balancing storage utilization:

- Click Storage Groups in the left navigation window and select a storage group.

- Click on the Statistics tab and select the desired Time Span to determine the read/write bandwidth demands on each storage group. Place the new volumes in the group with the lowest demands.

- Click on the Volumes tab to review the VM volumes assigned to the group. You can change the sorting on each column and re-order the columns as required.
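The balancing guidance above can be sketched as a simple selection rule: among the storage groups that would still keep 5 GB free for VCDs after the new volume is created, pick the one with the lowest read/write demand. The Python sketch below assumes you have copied the free space and bandwidth figures by hand from the Storage Groups page; the dictionary keys, group names, and values are hypothetical.

```python
def pick_storage_group(groups, new_volume_gb, vcd_reserve_gb=5):
    """Pick the least-busy group that still keeps the VCD reserve free.

    groups: list of dicts with hypothetical keys 'name', 'free_gb'
    and 'bandwidth_mbps' (figures read from the Statistics tab).
    Returns the chosen group's name, or None if no group has room.
    """
    candidates = [
        g for g in groups
        if g["free_gb"] - new_volume_gb >= vcd_reserve_gb
    ]
    if not candidates:
        return None
    return min(candidates, key=lambda g: g["bandwidth_mbps"])["name"]


groups = [
    {"name": "group-1", "free_gb": 40, "bandwidth_mbps": 120},
    {"name": "group-2", "free_gb": 200, "bandwidth_mbps": 35},
    {"name": "group-3", "free_gb": 22, "bandwidth_mbps": 10},
]
print(pick_storage_group(groups, new_volume_gb=20))  # group-2
```

Here group-3 has the lowest demand but would be left with only 2 GB free, so the sketch falls back to group-2.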

Allocating Network Resources

Avance pairs physical network ports across the two PMs to form a redundant virtual network interface (VIF). One or more VIFs can be assigned to each VM, and multiple VMs can use the same VIFs. Avance allows unlimited over-provisioning of network resources, so be sure to profile a VM’s network bandwidth/response time requirements before allocating VIFs. There is no way to proportionately allocate bandwidth resources between VMs sharing a VIF. Therefore, high use of network resources by one VM can reduce the performance of all VMs on that network. If a VM has a large bandwidth requirement, consider adding a dedicated NIC for that VM.

Virtual Machine Actions

When you select a VM, the following action buttons can appear, depending on the VM’s state and activity.

| Action | Description |
| --- | --- |
| Start | Boots the selected VM. |
| Boot From CD | Boots a VM from the selected virtual CD. |
| Console | Opens a console for the selected VM. |
| Export | The Export process stores the image of a Windows or Linux VM into a set of OVF and VHD files. These files can then be used as a template for importing or cloning a VM onto Avance units. Open Virtualization Format (OVF) is an open standard for packaging and distributing physical or virtual machine data. The OVF file contains metadata about the VM. A Virtual Hard Disk (VHD) is a file that contains the virtual disk information. The VM must be shut down before initiating the export. |
| Shutdown | Shuts down the selected VM. |
| Power Off | Immediately stops processing in the selected VM and destroys its memory state. Use this only as a last resort, when the VM cannot be successfully shut down. |
| Config | Launches the VM Re-Provisioning Wizard. The VM must be shut down before launching this wizard. |
| Remove | Permanently deletes the VM and (optionally) its attached data volumes. |
| Reset Device | When a VM crashes, Avance automatically restarts it, unless it has fallen below its mean time between failures (MTBF) threshold. If the VM is below the MTBF threshold, Avance leaves it in the crashed state. You can then click this to restart the VM and reset the MTBF counter. |
| Dump | Immediately stops processing of the selected VM, creates a dump of its memory state, and restarts the VM. Use this button only at the direction of your service provider, and only for troubleshooting a hung VM. |

Actions Available During Virtual Machine States and Activities

| State | Activity | Enabled Commands | Description |
| --- | --- | --- | --- |
| Busy | Installing | | Avance software is installing the boot volume for a new VM. |
| | Stopped | Start, Config, Export, Boot From CD, Remove | VM has been shut down or powered off. |
| | Booting | Console, Power Off, Dump | VM is starting. |
| | Running | Console, Shutdown, Power Off, Dump | VM is operating normally on redundant physical machines. |
| Alert | Running | Console, Shutdown, Power Off, Dump | VM is operating normally, but is not running on fully redundant resources. |
| | Stopping | Power Off, Remove | VM is being shut down in response to the Shutdown action, or when the remaining physical machine is transitioning into maintenance mode. |
| | Crashed | | VM crashed and is restarting. If enabled, e-Alerts and Call-Home messages are sent. |
| Critical | Crashed | | VM crashed too many times and exceeded its MTBF threshold. The VM is left in a crashed state until Reset Device is clicked. |
| | Dumping | Power Off | Harvesting crash dump. |

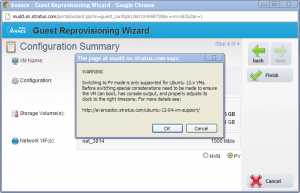

Re-provision Virtual Machines

Use the VM Re-Provisioning Wizard in the Avance Management Console to reconfigure virtual CPUs, memory, storage volumes and networks assigned to the VMs. The Wizard displays current allocations. Modify these or leave unchanged as needed. You can also finish re-provisioning anytime the Finish button is available.

- In the Avance Management Console, click Virtual Machines.

- Select the VM. Click Shutdown.

- When the VM status shows stopped, click Config. The VM Re-Provisioning Wizard opens.

Carefully review all changes before clicking Finish; they cannot be reversed after that point.

Do not allocate less than 256 MB of memory (600 MB for 64-bit systems).

Windows 2003 or earlier: If allocating more than 4 GB of memory to a VM, make sure your installation supports PAE mode.

If you are changing the number of assigned VCPUs in a Windows VM from 1 to n or n to 1, you must shut down and restart the VM again after restarting it at the end of the re-provisioning process. This allows the VM to correctly reconfigure itself for Symmetric Multiprocessing (SMP). The VM displays odd behavior and is not usable until it is rebooted.

Re-configuring Volumes:

- Add new volumes or attach existing volumes by clicking Create another volume.

- Click Detach Volume to disconnect a volume from the VM while preserving its data for future use.

- Click Delete Volume to permanently delete the volume and all associated data. Click Keep Volume to undo the delete.

Note: You cannot detach or delete boot volumes.

Assigning Specific MAC Addresses to VMs:

Note: It is recommended that you do not override auto-assigned MAC addresses. This step should only be needed where a specific MAC address is required, for example on VMs with software that is licensed on a MAC address basis.

- Start the Re-Provisioning Wizard.

- Step through the wizard until you reach the Networks page. Here you can view and change the MAC addresses.

- Click Finish.

Recovering Virtual Machine Resources

To conserve storage space, remove VMs, volumes, and VCDs when no longer needed. You may also need to immediately recover storage when less storage is available than required for certain activities, such as creating a volume or VCD.

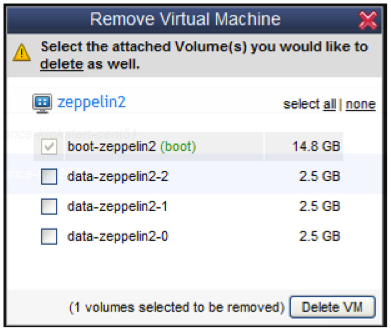

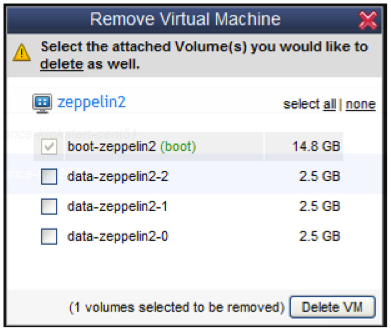

Removing VMs and Data Volumes

- In the Avance Management Console, click Virtual Machines.

- Select the VM to remove

- Click Shutdown.

- When the VM shows stopped, click Remove.

- Select any attached data volumes to remove. The boot volume is always selected. You can leave data volumes for archiving or use by another VM.

- Click Delete VM.

Cleaning Up Virtual Disk Volumes

Before deleting disk volumes, check with the administrator or other users to make sure the volumes are not being purposely saved.

- In the Avance Management Console, click Volumes.

- Note any volumes marked None in the VM column. These are not associated with a VM and so are unused.

- Select any unused volumes to delete.

- Click Remove.

Cleaning Up Unused VCDs

- In the Avance Management Console, click Virtual CDs.

- Note any VCDs marked as removable in the Can Remove column.

- Select a removable VCD.

- Click Remove.

Booting from a VCD

- In the Avance Management Console, click Virtual Machines.

- Select a VM. Click Shutdown.

- When the VM status shows stopped, click Boot from CD.

- Select the VCD to boot from. Click Boot.

A VM booted from CD boots as a hardware virtual machine (HVM), and can access only the first three disk volumes.

Troubleshooting Unresponsive VMs

If a Windows VM does not respond to application requests, you can dump its memory to a file for use in troubleshooting.

Windows must be configured to generate crash dump files. See the Microsoft article, How to generate a complete crash dump file or a kernel crash dump file by using an NMI on a Windows-based system (Article ID: 927069). Follow the instructions in “More Information.”

- In the Avance Management Console, click Virtual Machines.

- Select the unresponsive VM.

- Click Dump.

- Retrieve the dump file:

- For Windows VMs: C:\WINDOWS\MEMORY.DMP.

- For Linux VMs: dump files are not stored in the Linux file structure. Retrieve the dump file by generating a diagnostic file on the Preferences > Diagnostics page of the Avance Management Console (refer to the online help for instructions). Select Dumps or Full.

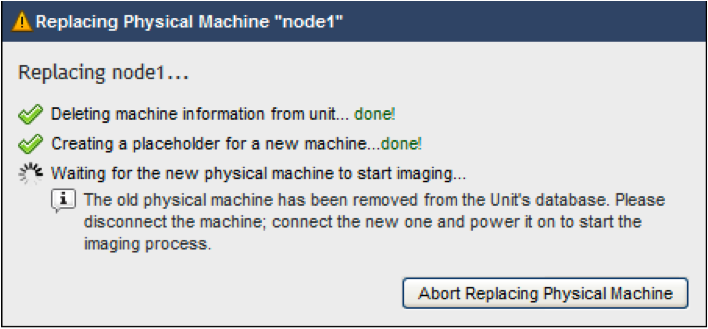

Avance deletes the PM from the Avance unit’s database, then prompts you to replace the PM.
